Enabling YARN Log Monitoring in Hadoop

Founder

2024-05-25 20:46:29


1. Enable JobManager Logs

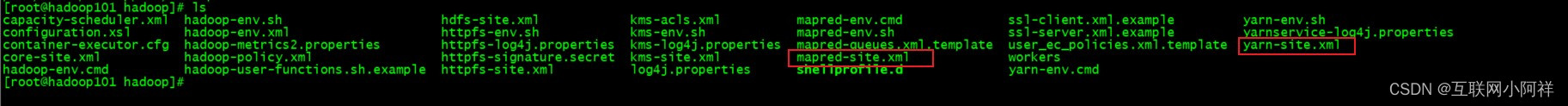

(1) On the NameNode, edit the configuration files ${hadoop_home}/etc/hadoop/yarn-site.xml and mapred-site.xml

- Edit yarn-site.xml:

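```xml
<configuration>
    <!-- Auxiliary service required for the MapReduce shuffle -->
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <!-- Enable log aggregation -->
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
    </property>
    <!-- Retention time for aggregated logs, in seconds: 10080 s is about 2.8 hours (604800 s = 7 days) -->
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>10080</value>
    </property>
    <!-- Directory on the default file system (HDFS) where aggregated container logs are stored -->
    <property>
        <name>yarn.nodemanager.remote-app-log-dir</name>
        <value>/flink/log</value>
    </property>
    <!-- Log server URL: point it at the host running the JobHistory server (hadoop101 in mapred-site.xml);
         localhost only resolves correctly on that host itself -->
    <property>
        <name>yarn.log.server.url</name>
        <value>http://hadoop101:19888/jobhistory/logs</value>
    </property>
    <!-- Job history directories (defined in mapred-default.xml, so mapred-site.xml is their usual home) -->
    <property>
        <name>mapreduce.jobhistory.intermediate-done-dir</name>
        <value>/history/done_intermediate</value>
    </property>
    <property>
        <name>mapreduce.jobhistory.done-dir</name>
        <value>/history/done</value>
    </property>
</configuration>
```

- Edit mapred-site.xml: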

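```xml
<configuration>
    <!-- Run MapReduce jobs on YARN -->
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <!-- JobHistory server RPC address -->
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>hadoop101:10020</value>
    </property>
    <!-- JobHistory server web UI address -->
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>hadoop101:19888</value>
    </property>
</configuration>
```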
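Hadoop does not validate property names, so a misspelled key (for example `mapreduce.jobhistroy.address`) is silently ignored and the default value applies. A minimal sketch of a sanity check, assuming each `<name>` element sits on a single line as in the files above; `check_props` is a hypothetical helper, not a Hadoop command.

```shell
# check_props FILE KEY... : print "missing: KEY" for every KEY without a <name>KEY</name> entry in FILE.
# Hypothetical helper; assumes each <name> element is written on one line.
# Returns 0 if all keys are present, 1 otherwise.
check_props() {
    file=$1
    shift
    missing=0
    for key in "$@"; do
        # -F matches the key literally, so the dots are not treated as regex wildcards
        if ! grep -qF "<name>$key</name>" "$file"; then
            echo "missing: $key"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_props "${hadoop_home}/etc/hadoop/mapred-site.xml" mapreduce.framework.name mapreduce.jobhistory.address mapreduce.jobhistory.webapp.address` prints nothing and succeeds when all three keys are spelled correctly.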

(2) Copy the configuration files to the other machines in the cluster:

```shell
scp mapred-site.xml user@ip:/path/on/target/machine
scp yarn-site.xml user@ip:/path/on/target/machine
```

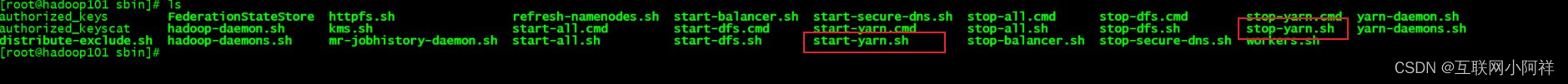
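With more than one worker, the two `scp` calls can be wrapped in a loop over the hosts. A sketch under stated assumptions: passwordless SSH is set up and the configuration directory is the same path on every machine. `sync_conf` and the `DRY_RUN` switch are hypothetical names, not Hadoop tools.

```shell
# sync_conf DIR HOST... : copy yarn-site.xml and mapred-site.xml from DIR to the same DIR on each HOST.
# Hypothetical helper. With DRY_RUN=1 it only prints the scp commands.
sync_conf() {
    dir=$1
    shift
    for host in "$@"; do
        for f in yarn-site.xml mapred-site.xml; do
            if [ "${DRY_RUN:-0}" = 1 ]; then
                echo scp "$dir/$f" "$host:$dir/"
            else
                scp "$dir/$f" "$host:$dir/" || return 1
            fi
        done
    done
}
```

Usage, with hypothetical host names: `sync_conf "${hadoop_home}/etc/hadoop" user@hadoop102 user@hadoop103`. Running it once with `DRY_RUN=1` first shows exactly what will be copied where.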

(3) Restart YARN and the history server

```shell
# Go to the sbin directory of the Hadoop installation first
cd ${hadoop_home}/sbin
./stop-yarn.sh && ./start-yarn.sh
```

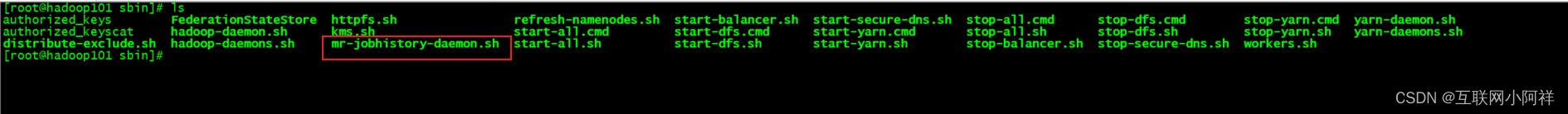

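```shell
# 117681 was the JobHistoryServer PID (as listed by jps) on the author's machine; use your own.
# Gentler alternatives: ./mr-jobhistory-daemon.sh stop historyserver, or on Hadoop 3.x: mapred --daemon stop historyserver
kill -9 117681 && ./mr-jobhistory-daemon.sh start historyserver
```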

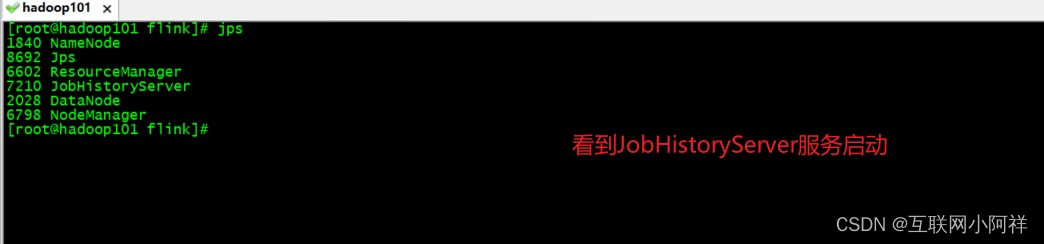
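Instead of copying the PID by hand, it can be read from `jps` output, whose lines have the form `<pid> <main class>`. A minimal sketch; `jhs_pid` is a hypothetical helper.

```shell
# jhs_pid: read `jps` output on stdin and print the PID of the JobHistoryServer process (nothing if absent).
# Hypothetical helper.
jhs_pid() {
    awk '$2 == "JobHistoryServer" { print $1 }'
}
```

Usage: `pid=$(jps | jhs_pid); [ -n "$pid" ] && kill "$pid"`, then start the history server again as above. A plain `kill` sends SIGTERM, which lets the daemon shut down cleanly, unlike `kill -9`.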

(4) Check that the services are running

```shell
jps
```

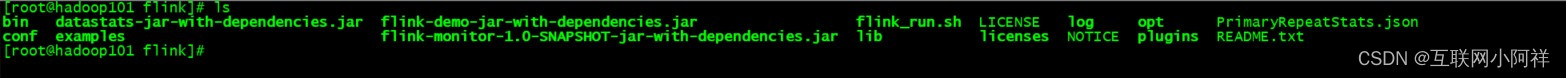

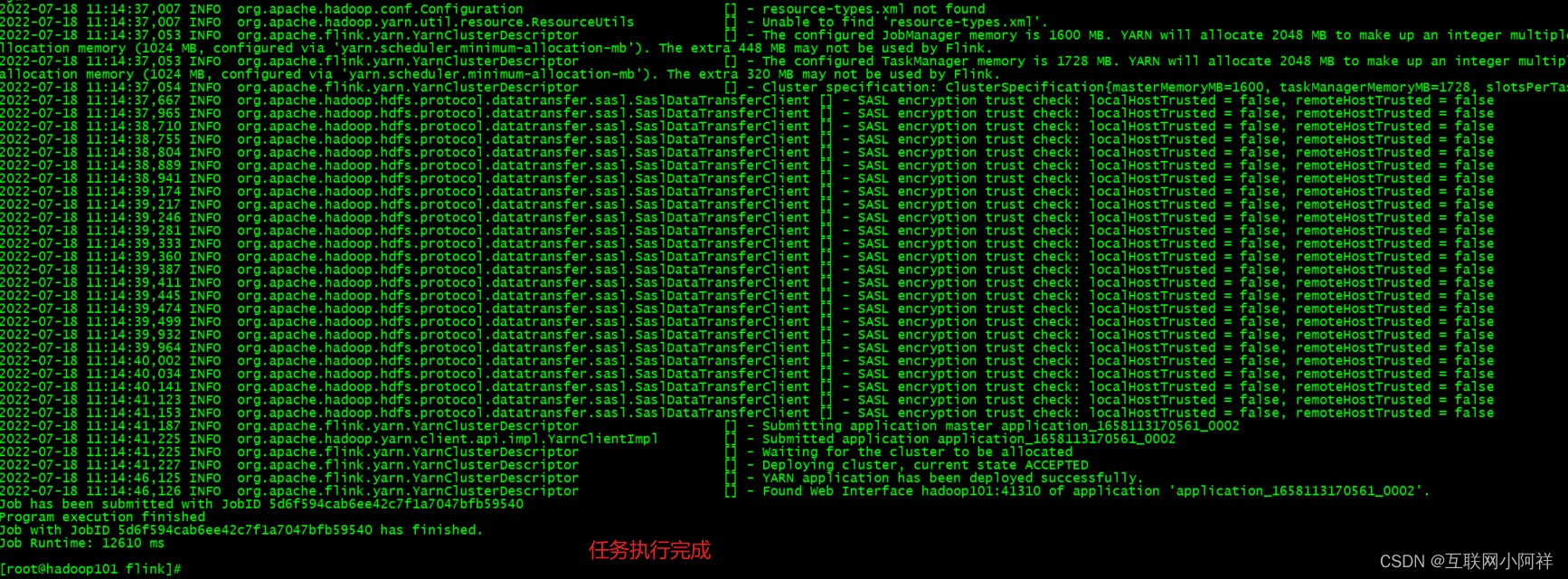
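Reading the `jps` list by eye is easy to get wrong on a busy node. A sketch that prints which expected daemons are absent; the daemon names you pass in are an assumption to adjust per host (for example `ResourceManager`, `NodeManager` and `JobHistoryServer` on hadoop101), and `missing_daemons` is a hypothetical helper.

```shell
# missing_daemons NAME... : read `jps` output on stdin and print each NAME that is not running.
# Hypothetical helper.
missing_daemons() {
    # Keep only the main-class column of jps output
    running=$(awk '{ print $2 }')
    for name in "$@"; do
        # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode"; -F: literal match
        printf '%s\n' "$running" | grep -qxF "$name" || echo "$name"
    done
}
```

Usage: `jps | missing_daemons ResourceManager NodeManager JobHistoryServer` prints nothing when all three are up.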

(5) Run Flink on YARN

```shell
./bin/flink run -m yarn-cluster -c com.lixiang.app.FlinkDemo ./flink-demo-jar-with-dependencies.jar
```

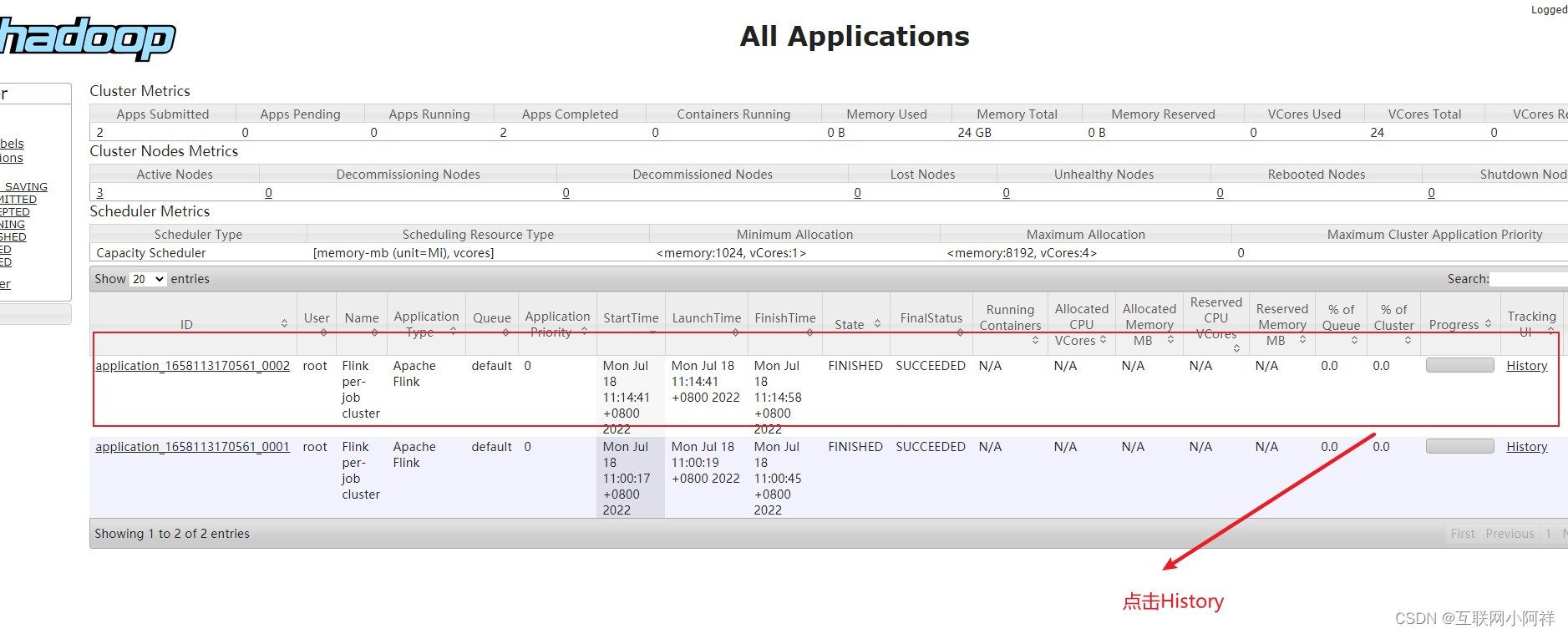

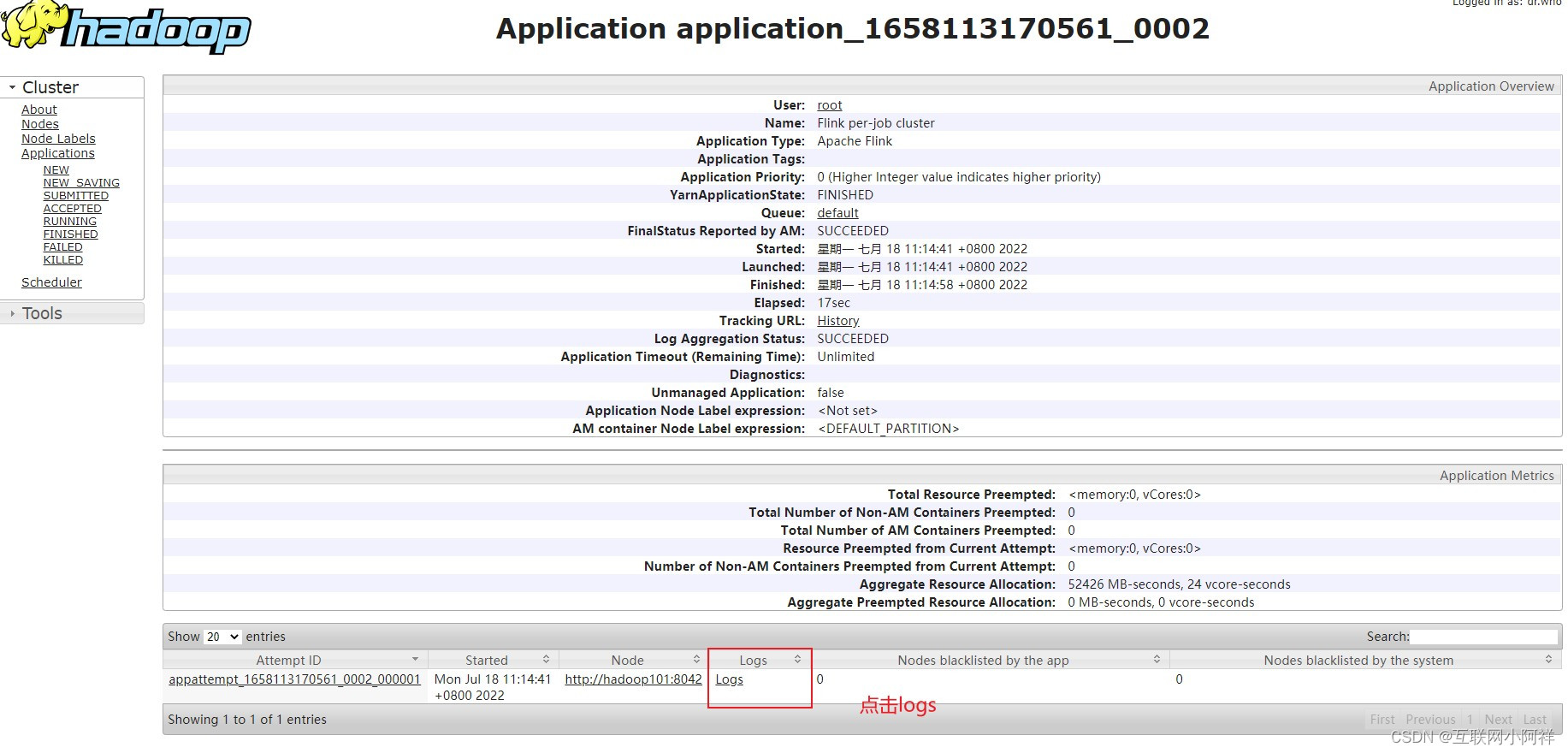

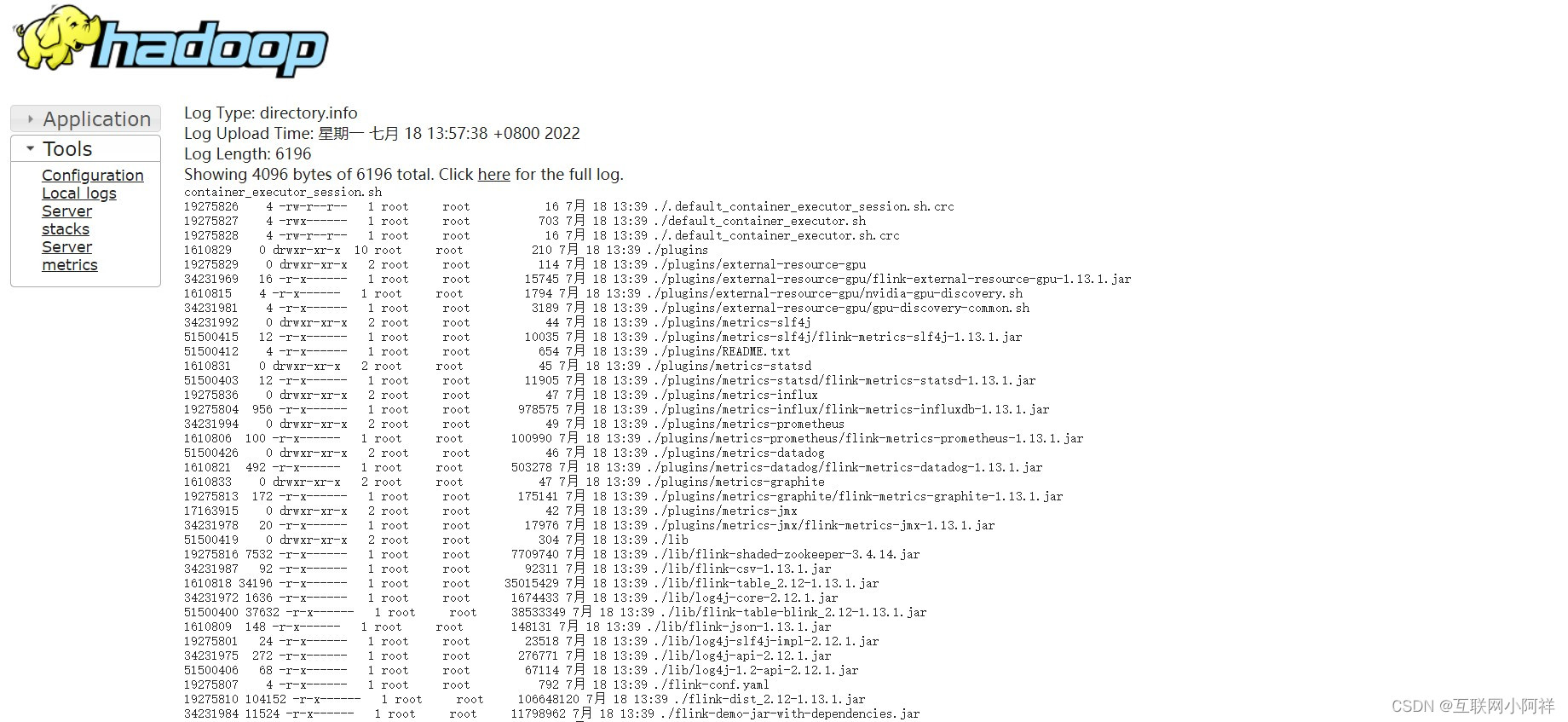
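Once aggregation has finished, the job's logs can also be fetched from the command line with `yarn logs -applicationId <applicationId>`, which reads the aggregated files under `yarn.nodemanager.remote-app-log-dir`. YARN application IDs have the form `application_<clusterTimestamp>_<sequence>`, so if the client output contains the ID it can be extracted with a pattern match. A sketch; `app_ids` is a hypothetical helper.

```shell
# app_ids: print every distinct YARN application ID found on stdin.
# Hypothetical helper; matches the application_<clusterTimestamp>_<sequence> format.
app_ids() {
    grep -oE 'application_[0-9]+_[0-9]+' | sort -u
}
```

Usage, assuming the client output was saved to a hypothetical file with `... 2>&1 | tee submit.log`: `app_ids < submit.log | while read -r id; do yarn logs -applicationId "$id"; done`.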

(6) Check the Hadoop web console
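The ResourceManager web UI listens on port 8088 by default, and the same application list is available as JSON from the ResourceManager REST API under `/ws/v1/cluster/apps`, optionally filtered with the `states` query parameter. A sketch that builds the URL, assuming the ResourceManager runs on hadoop101 with the default `yarn.resourcemanager.webapp.address`; `rm_apps_url` is a hypothetical helper.

```shell
# rm_apps_url HOST [STATE] : print the ResourceManager REST URL that lists applications,
# optionally filtered by state (e.g. RUNNING, FINISHED). Hypothetical helper; assumes port 8088.
rm_apps_url() {
    url="http://$1:8088/ws/v1/cluster/apps"
    if [ -n "${2:-}" ]; then
        url="$url?states=$2"
    fi
    echo "$url"
}
```

Usage: `curl -s "$(rm_apps_url hadoop101 RUNNING)"` returns the running applications, including their IDs and tracking URLs.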

2. Enable TaskManager Logs

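hive-site.xml:

```xml
<configuration>
    <property>
        <name>javax.jdo.option.ConnectionURL</name>
        <value>jdbc:mysql://192.168.139.101:3306/metastore?useSSL=false</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionDriverName</name>
        <value>com.mysql.jdbc.Driver</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionUserName</name>
        <value>root</value>
    </property>
    <property>
        <name>hive.metastore.warehouse.dir</name>
        <value>/user/hive/warehouse</value>
    </property>
    <property>
        <name>javax.jdo.option.ConnectionPassword</name>
        <value>123456</value>
    </property>
    <property>
        <name>hive.metastore.schema.verification</name>
        <value>false</value>
    </property>
    <property>
        <name>hive.metastore.event.db.notification.api.auth</name>
        <value>false</value>
    </property>
    <property>
        <name>hive.cli.print.current.db</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.cli.print.header</name>
        <value>true</value>
    </property>
    <!-- Replace "ip" with the address HiveServer2 should bind to -->
    <property>
        <name>hive.server2.thrift.bind.host</name>
        <value>ip</value>
    </property>
    <property>
        <name>hive.server2.thrift.port</name>
        <value>10000</value>
    </property>
</configuration>
```

Avro table with the schema embedded in the CREATE TABLE statement:

```sql
CREATE EXTERNAL TABLE tweets
COMMENT "A table backed by Avro data with the Avro schema embedded in the CREATE TABLE statement"
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.avro.AvroSerDe'
STORED AS
INPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerOutputFormat'
LOCATION '/user/hive/warehouse'
TBLPROPERTIES ('avro.schema.literal'='{
  "type": "record",
  "name": "Tweet",
  "namespace": "com.miguno.avro",
  "fields": [
    {"name": "username", "type": "string"},
    {"name": "tweet", "type": "string"},
    {"name": "timestamp", "type": "long"}
  ]
}');

INSERT INTO tweets VALUES ('zhaoliu', 'Hello word', 13800000000);
SELECT * FROM tweets;
```

Create tables with an external schema (an `.avsc` file referenced by `avro.schema.url`):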

```sql
CREATE EXTERNAL TABLE avro_test1
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.avro.AvroSerDe'
STORED AS
INPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerOutputFormat'
LOCATION '/user/tmp'
TBLPROPERTIES (
    'avro.schema.url'='hdfs:///user/hive/warehouse/student.avsc'
);
```

student.avsc:

```json
{
  "type": "record",
  "name": "student",
  "namespace": "com.tiejia.avro",
  "fields": [
    {"name": "SID", "type": "string", "default": ""},
    {"name": "Name", "type": "string", "default": ""},
    {"name": "Dept", "type": "string", "default": ""},
    {"name": "Phone", "type": "string", "default": ""},
    {"name": "Age", "type": "string", "default": ""},
    {"name": "Date", "type": "string", "default": ""}
  ]
}
```

tweets.avsc:

```json
{
  "type": "record",
  "name": "Tweet",
  "namespace": "com.miguno.avro",
  "fields": [
    {"name": "username", "type": "string"},
    {"name": "tweet", "type": "string"},
    {"name": "timestamp", "type": "long"}
  ]
}
```

```sql
CREATE EXTERNAL TABLE tweets
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.avro.AvroSerDe'
STORED AS
INPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerInputFormat'
OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerOutputFormat'
LOCATION '/user/tmp'
TBLPROPERTIES (
    'avro.schema.url'='hdfs:///user/hive/warehouse/tweets.avsc'
);
```
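`avro.schema.url` must point at a file that already exists in HDFS, so the `.avsc` schema files have to be uploaded before the tables are created. A sketch, assuming the schemas were saved locally as `student.avsc` and `tweets.avsc` and that the `hdfs` CLI is on the PATH; `upload_schemas`, `maybe_run` and `DRY_RUN` are hypothetical names.

```shell
# maybe_run CMD... : run CMD, or just print it when DRY_RUN=1. Hypothetical helper.
maybe_run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$@"
    else
        "$@"
    fi
}

# upload_schemas DIR FILE... : create DIR in HDFS and upload the local schema FILEs into it.
# Hypothetical helper; -put -f overwrites an existing copy of a schema.
upload_schemas() {
    dir=$1
    shift
    maybe_run hdfs dfs -mkdir -p "$dir" || return 1
    maybe_run hdfs dfs -put -f "$@" "$dir/"
}
```

Usage: `upload_schemas /user/hive/warehouse student.avsc tweets.avsc`, which matches the `hdfs:///user/hive/warehouse/...` URLs in the table definitions.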


