k8s + rook + Ceph notes

创始人

2024-06-02 19:00:40


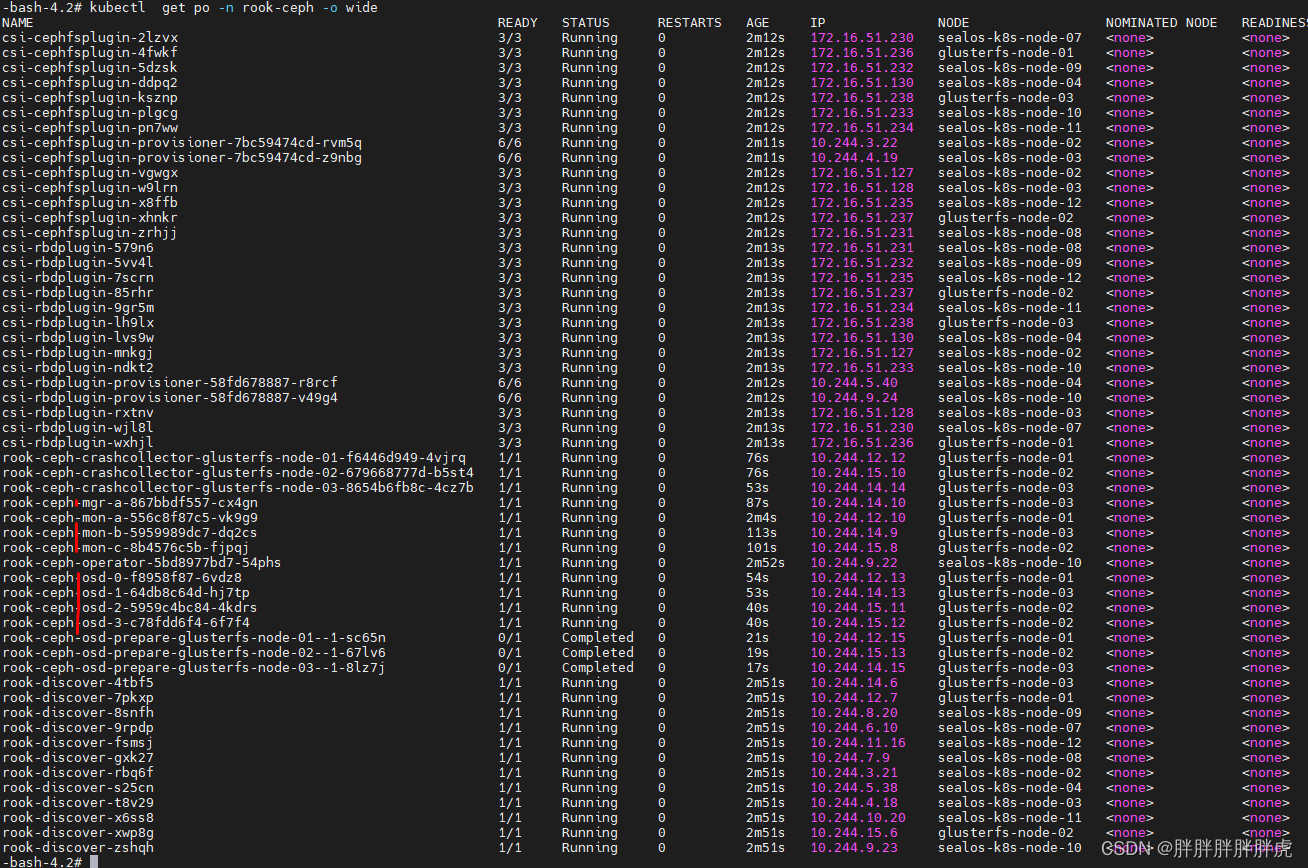

Deploying Ceph on k8s

git clone git@github.com:rook/rook.git --single-branch --branch v1.6.11
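After cloning, Rook is normally installed in this order: CRDs and common resources, then the operator, then the CephCluster, then the toolbox used for `ceph`/`rbd`/`rados` commands later in these notes. The following is a minimal bash sketch. It assumes the v1.6 example manifests sit under `cluster/examples/kubernetes/ceph` in the clone and are named `crds.yaml`, `common.yaml`, `operator.yaml`, `cluster.yaml` and `toolbox.yaml`. The function name `rook_deploy` is hypothetical.

```bash
# Hypothetical helper: apply the Rook v1.6 example manifests in dependency order.
# Assumes the v1.6 layout: rook/cluster/examples/kubernetes/ceph/
rook_deploy() {
  local dir="${1:-rook/cluster/examples/kubernetes/ceph}"
  # CRDs and RBAC/common resources must exist before the operator starts
  kubectl create -f "$dir/crds.yaml" -f "$dir/common.yaml" -f "$dir/operator.yaml" || return 1
  # The CephCluster resource (with the edited storage section)
  kubectl create -f "$dir/cluster.yaml" || return 1
  # Toolbox pod for running ceph/rbd/rados commands
  kubectl create -f "$dir/toolbox.yaml"
}
```

Run `rook_deploy` from the directory containing the clone, then watch the pods come up with `kubectl -n rook-ceph get pods -w`.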

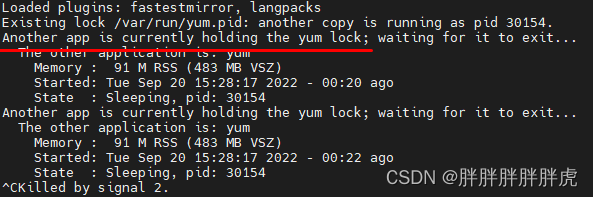

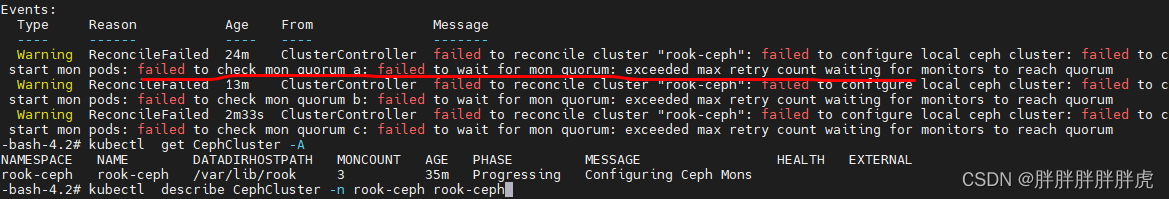

failed to reconcile cluster "rook-ceph": failed to configure local ceph cluster: failed to create cluster: failed to start ceph monitors: failed to start mon pods: failed to check mon quorum a: failed to wait for mon quorum: exceeded max retry count waiting for monitors to reach quorum
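This error means the operator gave up waiting for the mons to form quorum. The first things to look at are the mon pods and the operator log. Below is a bash sketch of those checks. It assumes the default `rook-ceph` namespace and Rook's standard `app=rook-ceph-mon` label; the function name is hypothetical. One commonly cited cause is stale data in `dataDirHostPath` (default `/var/lib/rook`) left over from an earlier install. That is only something worth ruling out, not necessarily what happened here.

```bash
# Hypothetical helper: gather the usual evidence for a mon quorum failure.
mon_quorum_debug() {
  local ns="${1:-rook-ceph}"
  # Are all mon pods Running, and on which nodes?
  kubectl -n "$ns" get pods -l app=rook-ceph-mon -o wide
  # Recent operator log lines mentioning mons or quorum
  kubectl -n "$ns" logs deploy/rook-ceph-operator --tail=200 | grep -i -E 'mon|quorum'
}
```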

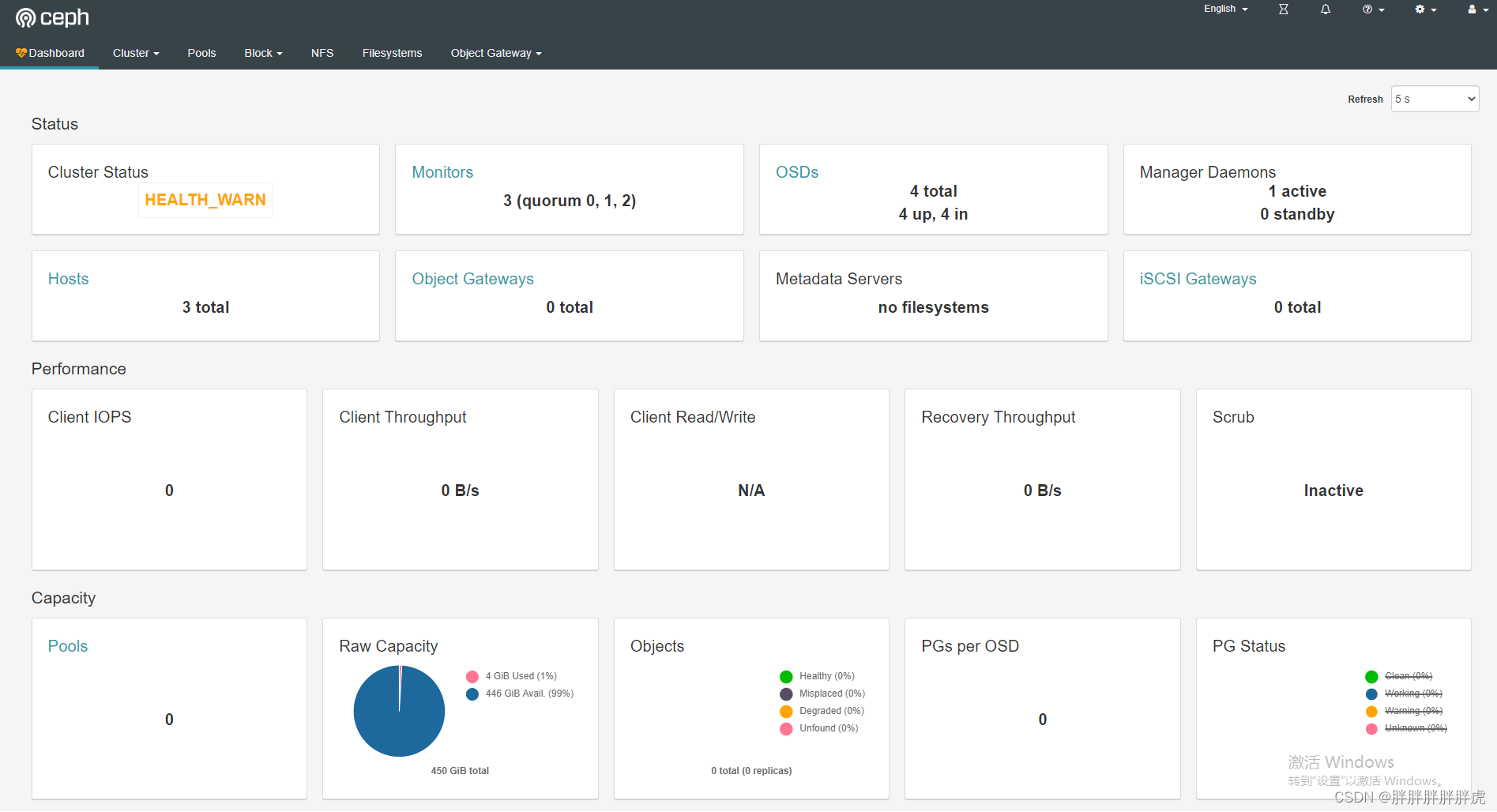

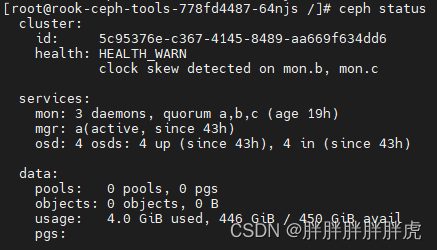

sh-4.4# ceph status
  cluster:
    id:     5c95376e-c367-4145-8489-aa669f634dd6
    health: HEALTH_WARN
            clock skew detected on mon.b, mon.c
  services:
    mon: 3 daemons, quorum a,b,c (age 39m)
    mgr: a(active, since 38m)
    osd: 4 osds: 4 up (since 38m), 4 in (since 38m)
  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   4.0 GiB used, 446 GiB / 450 GiB avail
    pgs:
sh-4.4#
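The `sh-4.4#` prompt above is a shell inside the Rook toolbox pod. For one-off commands, a small bash wrapper saves entering the pod each time. It assumes the toolbox Deployment is named `rook-ceph-tools` in the `rook-ceph` namespace, which matches the `rook-ceph-tools-778fd4487-64njs` pod that appears later in these notes. `ceph_tool` is a hypothetical name.

```bash
# Hypothetical wrapper: run one command inside the Rook toolbox pod.
ceph_tool() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- "$@"
}
```

Example: `ceph_tool ceph status` or `ceph_tool rbd ls replicapool`.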

operator.yaml

![image](/uploadfile/202406/39fb1d14be4eae9.png)

Note: `ROOK_ENABLE_DISCOVERY_DAEMON` controls automatic discovery of newly added disks; set it to "false" to turn discovery off.

# Whether to enable the flex driver. By default it is enabled and is fully supported, but will be deprecated in some future release
# in favor of the CSI driver.
ROOK_ENABLE_FLEX_DRIVER: "false"
# Whether to start the discovery daemon to watch for raw storage devices on nodes in the cluster.
# This daemon does not need to run if you are only going to create your OSDs based on StorageClassDeviceSets with PVCs.
ROOK_ENABLE_DISCOVERY_DAEMON: "true"
# Enable volume replication controller
CSI_ENABLE_VOLUME_REPLICATION: "false"
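You can flip one of these `KEY: "value"` switches without hand-editing, for example to turn the discovery daemon off as the note above suggests. The bash sketch below rewrites only the matching line and keeps its indentation. The function name is hypothetical, and it assumes the key appears once in `operator.yaml` in exactly this `KEY: "value"` form.

```bash
# Hypothetical helper: set KEY: "value" in operator.yaml (keeps a .bak copy).
set_operator_flag() {
  local file="$1" key="$2" value="$3"
  grep -q "^[[:space:]]*${key}:" "$file" || { echo "key $key not found in $file" >&2; return 1; }
  # Replace the quoted value after "KEY:", preserving the leading indentation
  sed -i.bak -E "s/^([[:space:]]*${key}:)[[:space:]]*\"[^\"]*\"/\1 \"${value}\"/" "$file"
}
```

Example: `set_operator_flag operator.yaml ROOK_ENABLE_DISCOVERY_DAEMON false`, then re-apply `operator.yaml`.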

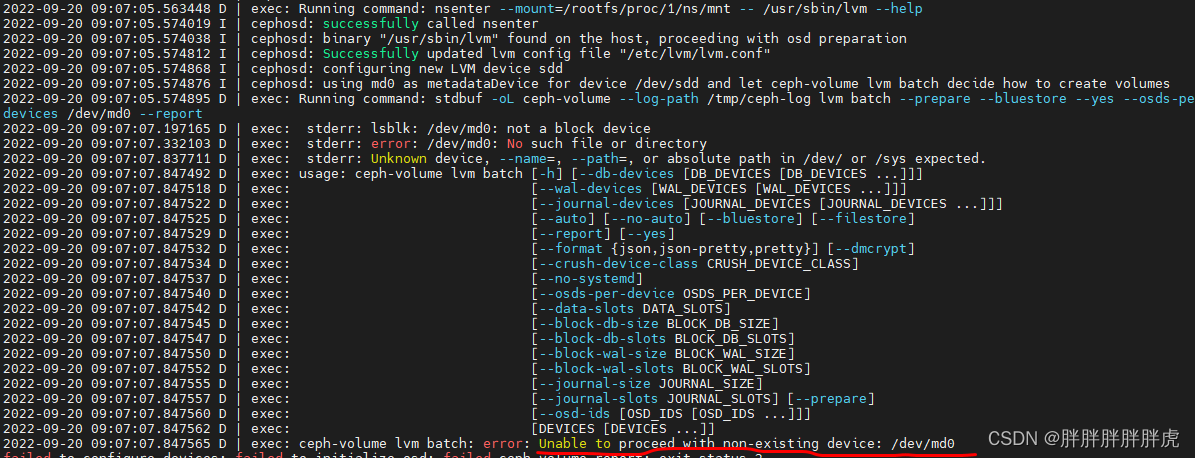

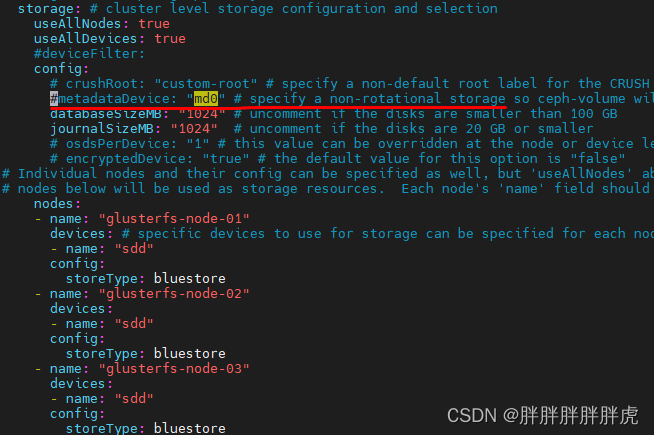

storage: # cluster level storage configuration and selection
  useAllNodes: true
  useAllDevices: true
  #deviceFilter:
  config:
    # crushRoot: "custom-root" # specify a non-default root label for the CRUSH map
    #metadataDevice: "md0" # specify a non-rotational storage so ceph-volume will use it as block db device of bluestore.
    databaseSizeMB: "1024" # uncomment if the disks are smaller than 100 GB
    journalSizeMB: "1024" # uncomment if the disks are 20 GB or smaller
    # osdsPerDevice: "1" # this value can be overridden at the node or device level
    # encryptedDevice: "true" # the default value for this option is "false"

# Individual nodes and their config can be specified as well, but 'useAllNodes' above must be set to false. Then, only the named

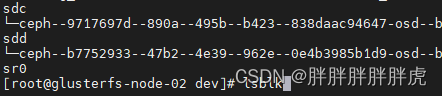

# nodes below will be used as storage resources. Each node's 'name' field should match their 'kubernetes.io/hostname' label.nodes:- name: "glusterfs-node-01"devices: # specific devices to use for storage can be specified for each node- name: "sdd"config:storeType: bluestore- name: "glusterfs-node-02"devices:- name: "sdd"config:storeType: bluestore- name: "glusterfs-node-03"devices:- name: "sdd"config:storeType: bluestore
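Each `name` under `nodes` has to match a node's `kubernetes.io/hostname` label. As the comment above says, the list only takes effect when `useAllNodes` is `false`, whereas the storage excerpt above still has `useAllNodes: true`. The bash sketch below checks the names with a label selector. The function name is hypothetical.

```bash
# Hypothetical check: every given name must match some node's kubernetes.io/hostname label.
check_storage_nodes() {
  local n missing=0
  for n in "$@"; do
    # A match prints e.g. "node/glusterfs-node-01"; empty output means no such label value
    if [ -z "$(kubectl get nodes -l "kubernetes.io/hostname=$n" -o name)" ]; then
      echo "no node labelled kubernetes.io/hostname=$n" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Example: `check_storage_nodes glusterfs-node-01 glusterfs-node-02 glusterfs-node-03`.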

Dashboard user: admin

-bash-4.2# kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}"| base64 --decode && echo

U/"]r=X317>W5cY]Mg#&
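The password command above can be combined with a port-forward to reach the dashboard from a workstation. This bash sketch assumes Rook's default secret `rook-ceph-dashboard-password` and service `rook-ceph-mgr-dashboard`, with dashboard SSL enabled on port 8443. The function name is hypothetical.

```bash
# Hypothetical helper: print the dashboard admin password, then forward the dashboard port.
rook_dashboard() {
  local ns="${1:-rook-ceph}"
  kubectl -n "$ns" get secret rook-ceph-dashboard-password \
    -o jsonpath="{['data']['password']}" | base64 --decode && echo
  # Blocks until Ctrl-C; then browse https://localhost:8443 and log in as admin
  kubectl -n "$ns" port-forward svc/rook-ceph-mgr-dashboard 8443:8443
}
```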

Issues

mon clock skew detected.

![image](/uploadfile/202406/346573ec09f55ae.png)
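Ceph raises this warning when the monitors' clocks differ by more than `mon_clock_drift_allowed` (0.05 s by default). The real fix is time synchronization on every node that hosts a mon. Raising the threshold only hides the warning. The bash sketch below covers inspection, the fix and the workaround. It assumes chrony is the time daemon on the CentOS nodes and that the toolbox Deployment is `rook-ceph-tools`; the function names are hypothetical.

```bash
# Hypothetical helpers for the "clock skew detected" warning.
# 1) Inspect: per-mon skew and latency as seen by the leader mon.
show_time_sync() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph time-sync-status
}
# 2) Fix, run on each mon node (assumes chrony): step the clock now, then show sync state.
resync_node_clock() {
  chronyc makestep && chronyc tracking
}
# 3) Workaround only: tolerate more drift, in seconds (default 0.05).
relax_clock_drift() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- \
    ceph config set mon mon_clock_drift_allowed "${1:-0.1}"
}
```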

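Commands

ceph mon dump

Shows the Ceph cluster ID (fsid), each monitor's name with its IP address and ports, the version information, and when the map last changed.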

# ceph mon dump

epoch 3

fsid 5c95376e-c367-4145-8489-aa669f634dd6

last_changed 2022-09-20 09:37:05.219208

created 2022-09-20 09:36:43.892436

min_mon_release 14 (nautilus)

0: [v2:10.1.5.237:3300/0,v1:10.1.5.237:6789/0] mon.a

1: [v2:10.1.94.89:3300/0,v1:10.1.94.89:6789/0] mon.b

2: [v2:10.1.181.36:3300/0,v1:10.1.181.36:6789/0] mon.c

dumped monmap epoch 3

ceph status

# ceph status
  cluster:
    id:     5c95376e-c367-4145-8489-aa669f634dd6
    health: HEALTH_WARN
            clock skew detected on mon.b, mon.c
  services:
    mon: 3 daemons, quorum a,b,c (age 44m)
    mgr: a(active, since 44h)
    osd: 4 osds: 4 up (since 44h), 4 in (since 44h)
  data:
    pools:   1 pools, 32 pgs
    objects: 6 objects, 36 B
    usage:   4.0 GiB used, 446 GiB / 450 GiB avail
    pgs:     32 active+clean

ceph df

# ceph df

RAW STORAGE:
CLASS   SIZE      AVAIL     USED     RAW USED   %RAW USED
hdd     450 GiB   446 GiB   12 MiB   4.0 GiB    0.89
TOTAL   450 GiB   446 GiB   12 MiB   4.0 GiB    0.89
POOLS:
POOL          ID   PGS   STORED   OBJECTS   USED      %USED   MAX AVAIL
replicapool   1    32    36 B     6         384 KiB   0       140 GiB

ceph osd pool ls

# ceph osd pool ls

replicapool

ceph osd tree

# ceph osd tree

ID  CLASS  WEIGHT   TYPE NAME                   STATUS  REWEIGHT  PRI-AFF
-1         0.43939  root default
-3         0.04880      host glusterfs-node-01
 0    hdd  0.04880          osd.0                up   1.00000  1.00000
-7         0.34180      host glusterfs-node-02
 2    hdd  0.29300          osd.2                up   1.00000  1.00000   # 300G
 3    hdd  0.04880          osd.3                up   1.00000  1.00000   # 50G
-5         0.04880      host glusterfs-node-03
 1    hdd  0.04880          osd.1                up   1.00000  1.00000

### An OSD's weight is proportional to its disk size

### 0.0488 * 6 = 0.2928 (the 300G disk is six times the size of a 50G disk)
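The weights above match each OSD's capacity in TiB, rounded to four decimals: 50 GiB / 1024 ≈ 0.0488 and 300 GiB / 1024 ≈ 0.2930. Host glusterfs-node-02 then weighs 0.2930 + 0.0488 = 0.3418. The bash sketch below reproduces the numbers under that assumption. The function name is hypothetical, and sizes are given in GiB.

```bash
# Hypothetical helper: CRUSH weight for a disk of the given size in GiB (TiB, 4 decimals).
crush_weight_gib() {
  awk -v gib="$1" 'BEGIN { printf "%.4f\n", gib / 1024 }'
}
```

`crush_weight_gib 50` prints `0.0488` and `crush_weight_gib 300` prints `0.2930`.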

ceph pg dump

# ceph pg dump

version 2270

stamp 2022-09-23 03:02:24.365166

last_osdmap_epoch 0

last_pg_scan 0

PG_STAT OBJECTS MISSING_ON_PRIMARY DEGRADED MISPLACED UNFOUND BYTES OMAP_BYTES* OMAP_KEYS* LOG DISK_LOG STATE STATE_STAMP VERSION REPORTED UP UP_PRIMARY ACTING ACTING_PRIMARY LAST_SCRUB SCRUB_STAMP LAST_DEEP_SCRUB DEEP_SCRUB_STAMP SNAPTRIMQ_LEN

3.17 0 0 0 0 0 0 0 0 22 22 active+clean 2022-09-22 09:27:15.314136 37'22 48:81 [2,0,1] 2 [2,0,1] 2 0'0 2022-09-22 09:27:14.575801 0'0 2022-09-22 09:27:14.575801 0

2.16 0 0 0 0 0 0 0 0 0 0 active+clean 2022-09-22 09:27:10.517141 0'0 48:27 [1,2,0] 1 [1,2,0] 1 0'0 2022-09-22 09:27:10.425347 0'0 2022-09-22 09:27:10.425347 0

1.15 0 0 0 0 0 0 0 0 55 55 active+clean 2022-09-22 08:13:52.711649 37'55 48:118 [2,1,0] 2 [2,1,0] 2 0'0 2022-09-22 06:05:40.406435 0'0 2022-09-22 06:05:40.406435 0

...

08:13:52.711778 37'47 48:132 [2,0,1] 2 [2,0,1] 2 0'0 2022-09-22 06:05:40.406435 0'0 2022-09-22 06:05:40.406435 0

3 2 0 0 0 0 316 0 0 467 467

2 26 0 0 0 0 445002 0 0 2936 2936

1 19 0 0 0 0 21655588 0 0 2083 2083

sum 47 0 0 0 0 22100906 0 0 5486 5486

OSD_STAT USED AVAIL USED_RAW TOTAL HB_PEERS PG_SUM PRIMARY_PG_SUM

3 19 MiB 49 GiB 1.0 GiB 50 GiB [0,1,2] 23 16

2 16 MiB 299 GiB 1.0 GiB 300 GiB [0,1,3] 73 54

1 24 MiB 49 GiB 1.0 GiB 50 GiB [0,2,3] 96 13

0 24 MiB 49 GiB 1.0 GiB 50 GiB [1,2,3] 96 13

sum 84 MiB 446 GiB 4.1 GiB 450 GiB

* NOTE: Omap statistics are gathered during deep scrub and may be inaccurate soon afterwards depending on utilisation. See http://docs.ceph.com/docs/master/dev/placement-group/#omap-statistics for further details.

dumped all

rados lspools

# rados lspools

replicapool

myfs-metadata

myfs-data0

ceph osd pool ls

# ceph osd pool ls

replicapool

# rbd ls replicapool

csi-vol-c2b0deff-3a3c-11ed-8414-4a357c53876e

# ceph osd pool ls

replicapool

myfs-metadata

myfs-data0

# ceph fs ls

name: myfs, metadata pool: myfs-metadata, data pools: [myfs-data0 ]

# rbd ls replicapool

csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba

csi-vol-c2b0deff-3a3c-11ed-8414-4a357c53876e

# Create an image

rbd create --image-feature layering --size 10G {pool-name}/{image-name}

-bash-4.2# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

...

pvc-18333ee4-a693-4864-a0ec-588260b41779 5Gi RWO Delete Bound default/volume-fe-doris-fe-0 rook-ceph-block 26m

...

-bash-4.2# kubectl describe pv pvc-18333ee4-a693-4864-a0ec-588260b41779

Name: pvc-18333ee4-a693-4864-a0ec-588260b41779

Labels:

Annotations: pv.kubernetes.io/provisioned-by: rook-ceph.rbd.csi.ceph.com

Finalizers: [kubernetes.io/pv-protection]

StorageClass: rook-ceph-block

Status: Bound

Claim: default/volume-fe-doris-fe-0

Reclaim Policy: Delete

Access Modes: RWO

VolumeMode: Filesystem

Capacity: 5Gi

Node Affinity:

Message:

Source:
    Type:              CSI (a Container Storage Interface (CSI) volume source)
    Driver:            rook-ceph.rbd.csi.ceph.com
    FSType:            ext4
    VolumeHandle:      0001-0009-rook-ceph-0000000000000001-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba
    ReadOnly:          false
    VolumeAttributes:      clusterID=rook-ceph
                           csi.storage.k8s.io/pv/name=pvc-18333ee4-a693-4864-a0ec-588260b41779
                           csi.storage.k8s.io/pvc/name=volume-fe-doris-fe-0
                           csi.storage.k8s.io/pvc/namespace=default
                           imageFeatures=layering
                           imageFormat=2
                           imageName=csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba
                           journalPool=replicapool
                           pool=replicapool
                           storage.kubernetes.io/csiProvisionerIdentity=1663838031040-8081-rook-ceph.rbd.csi.ceph.com

Events:

-bash-4.2#

############### rook ceph tools ###############

[root@rook-ceph-tools-778fd4487-64njs /]# ceph osd pool ls

replicapool

myfs-metadata

myfs-data0

### RBD volumes created above

[root@rook-ceph-tools-778fd4487-64njs /]# rbd ls replicapool

csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba

csi-vol-c2b0deff-3a3c-11ed-8414-4a357c53876e
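The PV's `imageName` volume attribute ties it to one of these images: `pvc-18333ee4-a693-4864-a0ec-588260b41779` is backed by `csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba` in `replicapool`. The bash sketch below resolves that mapping. It assumes the PV was provisioned by the Rook RBD CSI driver, so that `spec.csi.volumeAttributes` carries `pool` and `imageName` as in the describe output above. The function name is hypothetical.

```bash
# Hypothetical helper: print "<pool>/<image>" backing a CSI RBD PersistentVolume.
pv_to_rbd_image() {
  local pv="$1"
  kubectl get pv "$pv" \
    -o jsonpath='{.spec.csi.volumeAttributes.pool}/{.spec.csi.volumeAttributes.imageName}{"\n"}'
}
```

The result can be passed to `rbd info` inside the toolbox.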

[root@rook-ceph-tools-778fd4487-64njs /]# rados -p replicapool ls

rbd_data.2673f7a3881f6.0000000000000231

rbd_data.2673f7a3881f6.0000000000000205

rbd_data.2673f7a3881f6.0000000000000004

rbd_directory

...

rbd_header.2673f7a3881f6

rbd_info

...

csi.volume.c2b0deff-3a3c-11ed-8414-4a357c53876e

rbd_data.2673f7a3881f6.0000000000000202

csi.volumes.default

rbd_data.2673f7a3881f6.0000000000000200

rbd_id.csi-vol-c2b0deff-3a3c-11ed-8414-4a357c53876e

csi.volume.0755cfdc-3a5b-11ed-bbef-ae19c7afbaba

rbd_data.2673f7a3881f6.0000000000000203

rbd_data.1fe298ffc0c91.00000000000000a0

rbd_header.1fe298ffc0c91

rbd_data.1fe298ffc0c91.00000000000000ff

...

rbd_id.csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba

rbd_data.2673f7a3881f6.0000000000000400

rbd_data.2673f7a3881f6.0000000000000120

rbd_data.2673f7a3881f6.0000000000000360
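Data objects are named `rbd_data.<image id>.<object number>`, where the object number is 16 hex digits, so each object maps back to a byte range of the image. The bash sketch below assumes the 4 MiB object size this image uses (order 22, from its header). The function name is hypothetical.

```bash
# Hypothetical helper: starting byte offset in the image covered by an rbd_data object.
# Usage: rbd_object_offset <object-name> [order]   (order 22 => 4 MiB objects)
rbd_object_offset() {
  local name="$1" order="${2:-22}"
  local objno_hex="${name##*.}"   # text after the last dot, e.g. 0000000000000231
  echo $(( 16#$objno_hex << order ))
}
```

For example, `rbd_data.2673f7a3881f6.0000000000000231` is object 0x231 = 561. It covers bytes 2353004544 up to 2357198848 of `csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba`.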

[root@rook-ceph-tools-778fd4487-64njs /]# rados -p replicapool ls | grep csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba

rbd_id.csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba

[root@rook-ceph-tools-778fd4487-64njs /]# rados -p replicapool listomapvals rbd_directory

id_1fe298ffc0c91

value (48 bytes) :

00000000 2c 00 00 00 63 73 69 2d 76 6f 6c 2d 63 32 62 30 |,...csi-vol-c2b0|

00000010 64 65 66 66 2d 33 61 33 63 2d 31 31 65 64 2d 38 |deff-3a3c-11ed-8|

00000020 34 31 34 2d 34 61 33 35 37 63 35 33 38 37 36 65 |414-4a357c53876e|

00000030

######## View volume metadata

id_2673f7a3881f6

value (48 bytes) :

00000000 2c 00 00 00 63 73 69 2d 76 6f 6c 2d 30 37 35 35 |,...csi-vol-0755|

00000010 63 66 64 63 2d 33 61 35 62 2d 31 31 65 64 2d 62 |cfdc-3a5b-11ed-b|

00000020 62 65 66 2d 61 65 31 39 63 37 61 66 62 61 62 61 |bef-ae19c7afbaba|

00000030

name_csi-vol-0755cfdc-3a5b-11ed-bbef-ae19c7afbaba

value (17 bytes) :

00000000 0d 00 00 00 32 36 37 33 66 37 61 33 38 38 31 66 |....2673f7a3881f|

00000010 36 |6|

00000011

name_csi-vol-c2b0deff-3a3c-11ed-8414-4a357c53876e

value (17 bytes) :

00000000 0d 00 00 00 31 66 65 32 39 38 66 66 63 30 63 39 |....1fe298ffc0c9|

00000010 31 |1|

00000011

#### List the attributes stored in the image metadata (header)

[root@rook-ceph-tools-778fd4487-64njs /]# rados -p replicapool listomapvals rbd_header.2673f7a3881f6

access_timestamp

value (8 bytes) :

00000000 47 2e 2c 63 d2 b9 29 1d |G.,c..).|

00000008

create_timestamp

value (8 bytes) :

00000000 47 2e 2c 63 d2 b9 29 1d |G.,c..).|

00000008

features

value (8 bytes) :

00000000 01 00 00 00 00 00 00 00 |........|

00000008

modify_timestamp

value (8 bytes) :

00000000 47 2e 2c 63 d2 b9 29 1d |G.,c..).|

00000008

object_prefix

value (26 bytes) :

00000000 16 00 00 00 72 62 64 5f 64 61 74 61 2e 32 36 37 |....rbd_data.267|

00000010 33 66 37 61 33 38 38 31 66 36 |3f7a3881f6|

0000001a

### 1<<0x16 = 4M, so each data object covers a 4 MiB range. The rbd_default_order option controls the RADOS object size.

order

value (1 bytes) :

00000000 16 |.|

00000001

size

value (8 bytes) :

00000000 00 00 00 40 01 00 00 00 |...@....|

00000008

snap_seq

value (8 bytes) :

00000000 00 00 00 00 00 00 00 00 |........|

00000008

[root@rook-ceph-tools-778fd4487-64njs /]#
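The hex dumps above decode as follows.

- **String values.** The values in `rbd_directory` and `object_prefix` are Ceph-encoded strings: a 4-byte little-endian length followed by the bytes. For example, 0x2c = 44 is the length of `csi-vol-` plus a 36-character UUID, and 0x0d = 13 is the length of the image id. So `name_csi-vol-0755cfdc-...` maps to id `2673f7a3881f6`, and `id_2673f7a3881f6` maps back to the image name.
- **Integer values.** The numeric header fields are little-endian integers. `size` = 0x140000000 = 5368709120 bytes = 5 GiB, which matches the 5Gi PV. `features` = 1, the layering bit. `order` = 0x16 = 22, giving 4 MiB objects.
- **Timestamps.** Reading the timestamps as two 32-bit words, seconds then nanoseconds, is an assumption based on Ceph's `utime_t` encoding. On that reading, the first word is 1663839815, i.e. 2022-09-22 09:43:35 UTC.

The function names in the sketch below are hypothetical, and the input is the space-separated hex column.

```bash
# Hypothetical decoders for `rados listomapvals` hex dumps (space-separated hex bytes).

# Ceph-encoded string: 4-byte little-endian length, then that many bytes.
decode_ceph_string() {
  local -a b
  read -r -a b <<< "$*"
  local len=$(( 16#${b[0]} | 16#${b[1]} << 8 | 16#${b[2]} << 16 | 16#${b[3]} << 24 ))
  local i ch out=""
  for (( i = 4; i < 4 + len && i < ${#b[@]}; i++ )); do
    printf -v ch "\\x${b[i]}"   # hex byte -> character
    out+="$ch"
  done
  printf '%s\n' "$out"
}

# Little-endian unsigned integer (bash arithmetic is signed 64-bit: values < 2^63 only).
le_hex_to_int() {
  local -a b
  read -r -a b <<< "$*"
  local i v=0
  for (( i = ${#b[@]} - 1; i >= 0; i-- )); do   # most significant byte comes last
    v=$(( (v << 8) | 16#${b[i]} ))
  done
  echo "$v"
}
```

Example: `echo $(( 1 << $(le_hex_to_int 16) ))` gives the object size in bytes (4194304).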

### Export the pool's data to the file pool_centent

rados -p replicapool export pool_centent

pool

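# Create a pool

ceph osd pool create {pool-name}

# Set the pool's replica count to 2

ceph osd pool set {pool-name} size 2

# Delete a pool (requires mon_allow_pool_delete=true)

ceph osd pool delete {pool-name} {pool-name} --yes-i-really-really-mean-it

# rados df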

POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

myfs-data0 384 KiB 2 0 6 0 0 0 1058 529 KiB 6 4 KiB 0 B 0 B

myfs-metadata 3 MiB 26 0 78 0 0 0 144884 72 MiB 4447 2.9 MiB 0 B 0 B

replicapool 51 MiB 19 0 57 0 0 0 1367 8.4 MiB 538 118 MiB 0 B 0 B

total_objects 47

total_used 4.1 GiB

total_avail 446 GiB

total_space 450 GiB
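The pool commands above can be wrapped as bash functions that take the pool name as a parameter. This is a sketch to run wherever the `ceph` CLI works, e.g. in the toolbox pod. The function names, the default of 32 PGs (what `replicapool` uses above) and the `rbd` application tag are assumptions. Pools that Rook creates from a `CephBlockPool` or `CephFilesystem` resource are probably better changed through those resources, since the operator reconciles them.

```bash
# Hypothetical wrappers around the pool commands (run inside the toolbox).
pool_create() {            # pool_create <pool> [pg_num] [size]
  local pool="$1" pgs="${2:-32}" size="${3:-2}"
  ceph osd pool create "$pool" "$pgs" &&
  ceph osd pool set "$pool" size "$size" &&
  ceph osd pool application enable "$pool" rbd
}
pool_delete() {            # pool_delete <pool>   (irreversible)
  local pool="$1" rc=0
  ceph config set mon mon_allow_pool_delete true || return 1
  ceph osd pool delete "$pool" "$pool" --yes-i-really-really-mean-it || rc=$?
  # Turn the safety switch back off whatever the delete returned
  ceph config set mon mon_allow_pool_delete false
  return "$rc"
}
```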

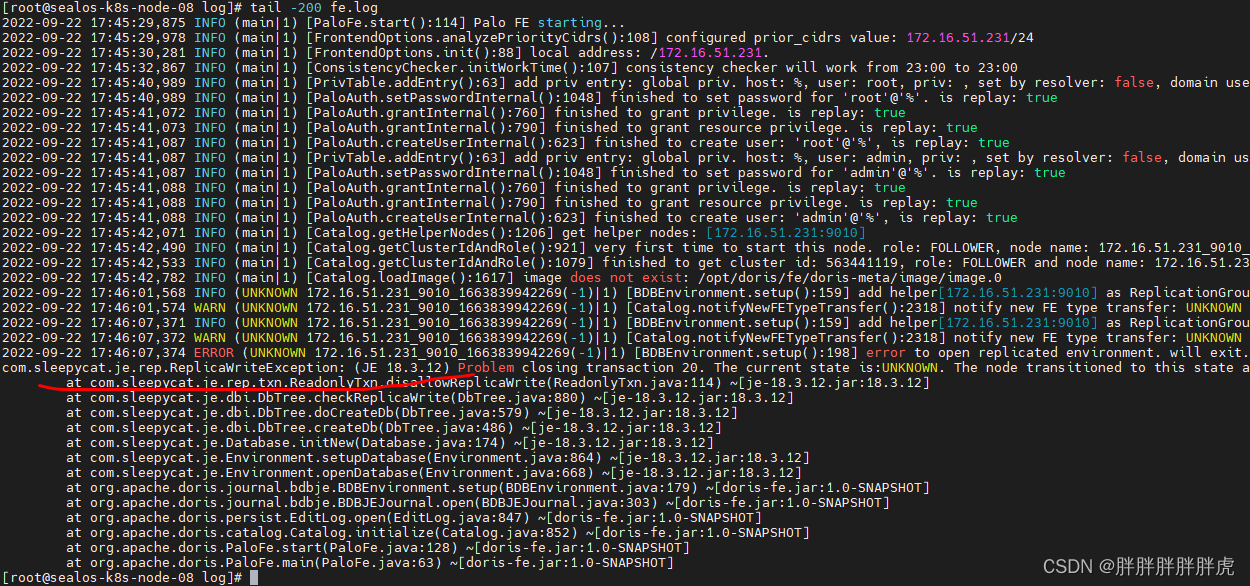

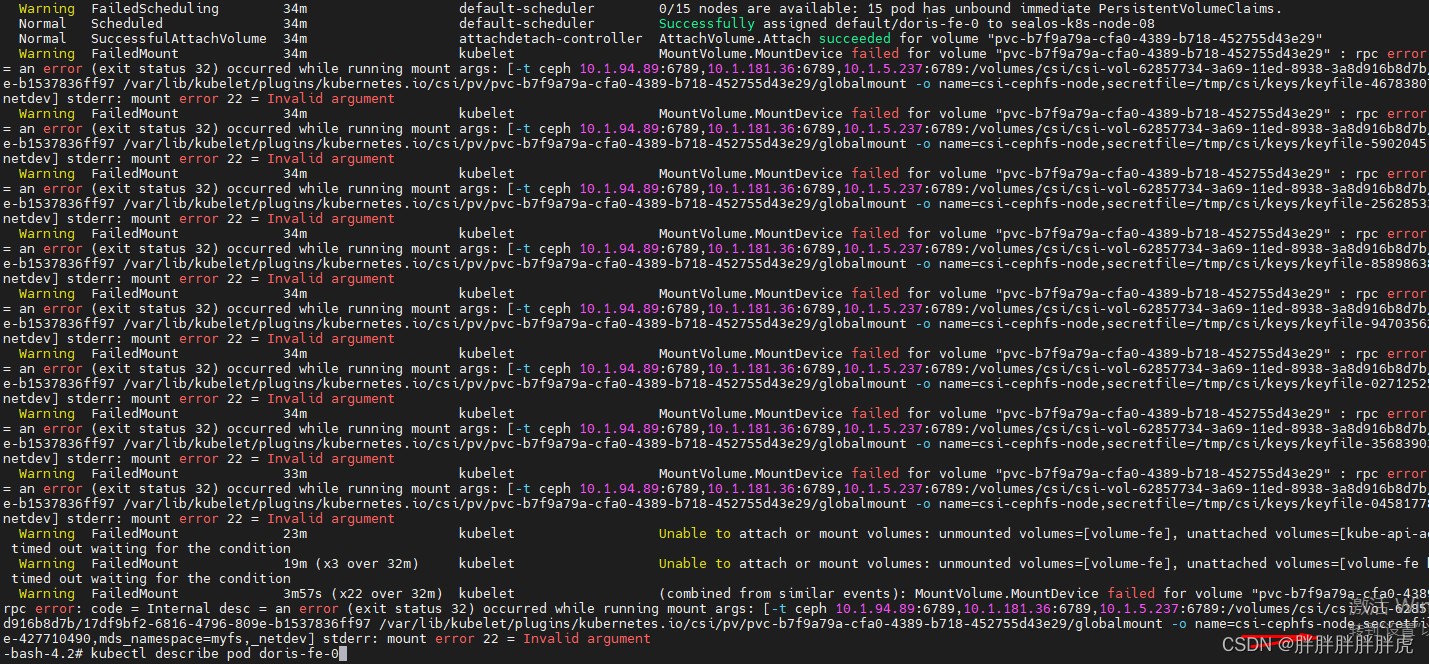

doris + ceph

rbd

ReadWriteOnce

ReadWriteMany

multi node access modes are only supported on rbd `block` type volumes

cephfs
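The message above appears to be the RBD CSI provisioner rejecting `ReadWriteMany` for a filesystem-mode volume. RBD allows multi-node access only with `volumeMode: Block`, and a shared filesystem needs CephFS. The bash sketch below creates one claim of each kind through a heredoc. `rook-ceph-block` comes from the PV above. `rook-cephfs` is assumed to be the CephFS StorageClass name, as in Rook's examples. The claim names and sizes are hypothetical.

```bash
# Hypothetical helper: create an RWX raw-block RBD claim and an RWX CephFS claim.
create_rwx_claims() {
  local ns="${1:-default}"
  kubectl -n "$ns" apply -f - <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-rwx-block            # hypothetical name
spec:
  storageClassName: rook-ceph-block
  volumeMode: Block              # RBD supports RWX only for raw block volumes
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-rwx               # hypothetical name
spec:
  storageClassName: rook-cephfs  # assumed CephFS StorageClass name
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 5Gi
EOF
}
```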

